Okay, I apologize for doing this in a reply, but since this paper was published in AAAS Science, this needs a detailed rebuttal. I'll try to summarize as simply as I can why this study is garbage and Science should be ashamed for publishing it. 🧵 https://t.co/4IjRKx4iNb

The hypothesis of the paper is that professionals given writing tasks will complete them faster and with higher quality if assisted by ChatGPT. Seems simple enough. The study had around 500 participants, which is an impressive sample size.

Let's assume, for the sake of argument, that all of the controls and sampling-bias corrections were applied correctly and that the data is a sound statistical sample to draw conclusions from. I did not check extensively, but nothing jumped out at me when I downloaded the data: https://t.co/Z8rRIWWXNm

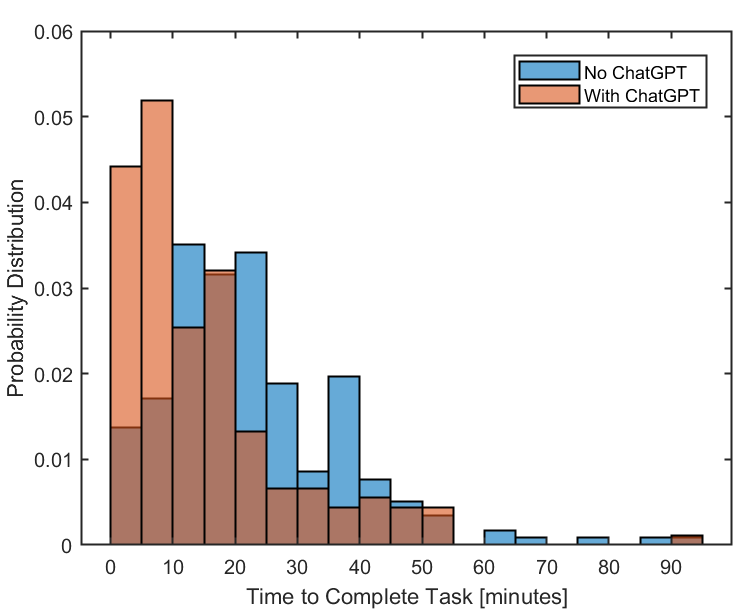

This is my recreation of their histogram of time to complete task 2, where roughly half the participants had ChatGPT and the other half did not. Without GPT, the task took an average of 18 minutes; with GPT, it took an average of only 10 minutes. YAY! Over 40% less time!
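As a worked check on that headline number, using only the two rounded averages quoted above (18 minutes without GPT, 10 minutes with), the relative cut in mean completion time is:

$$
\frac{\bar{t}_{\text{no GPT}} - \bar{t}_{\text{GPT}}}{\bar{t}_{\text{no GPT}}} = \frac{18 - 10}{18} = \frac{8}{18} \approx 0.44
$$

That is, the GPT group's mean time is about 56% of the no-GPT group's, roughly a 44% reduction.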

Not so fast! We have to acknowledge that the spread in completion times is very large relative to the means, which is common in studies like this. We need a statistically rigorous way to compare these two populations.

We might naturally gravitate to a two-sided Student's t-test, which answers the question "is the difference between the means of these two populations statistically significant?" You can read about it here: https://t.co/70L4DoAel0
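For readers who want to try this themselves, here is a minimal sketch of a two-sided two-sample t-test using SciPy's `scipy.stats.ttest_ind`. The completion times are simulated log-normal draws, an assumption made purely for illustration; they are not the study's data. `equal_var=True` gives the classic Student's test, and Welch's variant (`equal_var=False`) is shown alongside for comparison.

```python
import numpy as np
from scipy import stats

# Simulated completion times in minutes (NOT the study's data):
# log-normal draws with medians of roughly 15 and 8 minutes.
rng = np.random.default_rng(0)
no_gpt = rng.lognormal(mean=np.log(15), sigma=0.6, size=250)
gpt = rng.lognormal(mean=np.log(8), sigma=0.6, size=250)

# Two-sided Student's t-test: pooled variance, the classic textbook form.
student = stats.ttest_ind(no_gpt, gpt, equal_var=True)
# Welch's variant drops the equal-variance assumption.
welch = stats.ttest_ind(no_gpt, gpt, equal_var=False)

print(f"Student t = {student.statistic:.2f}, p = {student.pvalue:.2g}")
print(f"Welch   t = {welch.statistic:.2f}, p = {welch.pvalue:.2g}")
```

Note that this runs happily on raw, skewed times; nothing in the function checks whether the normality assumption actually holds.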

But we have to be careful, because a t-test assumes normally distributed data. This is where most studies of this type go wrong: they do not rigorously check which distribution best describes the population under study.
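One way to run that check is a formal normality test. The sketch below uses the Shapiro-Wilk test (`scipy.stats.shapiro`), which is my choice here, not necessarily what the paper or this thread used, on simulated log-normal completion times (an assumption, not the study's data). It compares the raw times with their logarithms.

```python
import numpy as np
from scipy import stats

# Simulated right-skewed completion times in minutes (NOT the study's data).
rng = np.random.default_rng(0)
times = rng.lognormal(mean=np.log(12), sigma=0.6, size=250)

# Shapiro-Wilk: W close to 1 means "looks normal"; a small p-value rejects normality.
raw = stats.shapiro(times)
logged = stats.shapiro(np.log(times))

print(f"raw times: W = {raw.statistic:.3f}, p = {raw.pvalue:.2g}")
print(f"log times: W = {logged.statistic:.3f}, p = {logged.pvalue:.2g}")
```

A histogram or Q-Q plot of the same two arrays tells the same story visually.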

If you apply the t-test to this data as is, you will find that the difference in means is statistically significant at the 99.9% confidence level. Hey, that sounds really awesome! ChatGPT for the win!

Hold on. The problem here is that completion times for most human tasks follow a log-normal distribution, which has a fat right tail. There are many examples showing that a log-normal fits the spread of task completion times where a normal distribution fails: https://t.co/mRrZhwwGAJ
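To compare the two candidate distributions directly, you can fit both by maximum likelihood and compare a criterion such as AIC. This is a minimal sketch with SciPy's `norm.fit` and `lognorm.fit`; the data is simulated (an assumption, not the study's numbers), and AIC is just one reasonable choice of model-comparison metric.

```python
import numpy as np
from scipy import stats

# Simulated right-skewed completion times in minutes (NOT the study's data).
rng = np.random.default_rng(3)
times = rng.lognormal(mean=np.log(12), sigma=0.7, size=300)

# Maximum-likelihood fits. floc=0 pins the log-normal's location at zero,
# so both models have two free parameters.
mu, sd = stats.norm.fit(times)
shape, loc, scale = stats.lognorm.fit(times, floc=0)

ll_norm = np.sum(stats.norm.logpdf(times, mu, sd))
ll_lognorm = np.sum(stats.lognorm.logpdf(times, shape, loc, scale))

# AIC = 2k - 2 * log-likelihood; lower is better. k = 2 for both models.
aic_norm = 2 * 2 - 2 * ll_norm
aic_lognorm = 2 * 2 - 2 * ll_lognorm
print(f"AIC normal: {aic_norm:.1f}, AIC log-normal: {aic_lognorm:.1f}")
```

A normal fit also puts probability on negative completion times, which is another hint it is the wrong model for this kind of data.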

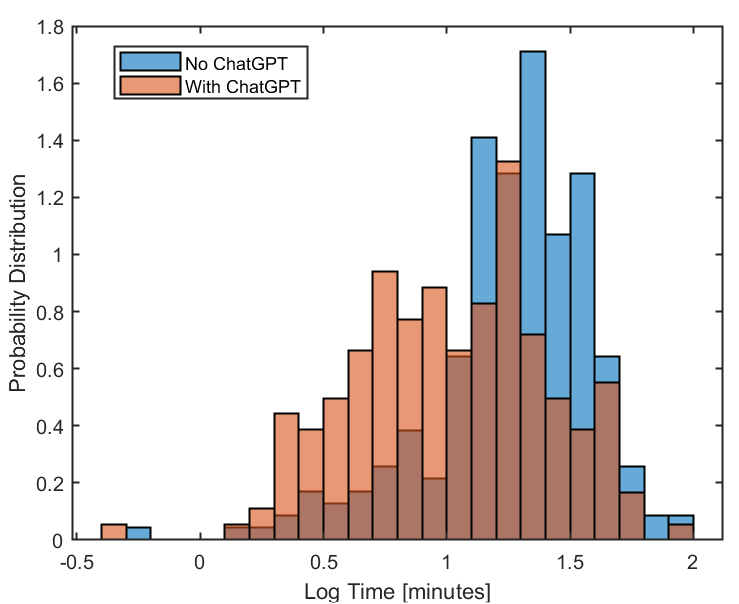

Let's plot this data on a semi-log plot (log-scaled time axis). Hey! Look at those beautiful normal distributions! The no-GPT case has a bit of a fat left tail, but we now have our normally distributed data. So what happens if we do a t-test on this bad boy?

The result still looks great for ChatGPT; I just wanted to make you suffer through the correct way to do the calculations. The t-score is over 6, which is incredibly significant. So what the heck is the problem? The control.
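Doing the test "the correct way" just means log-transforming the times before calling the same t-test. Here is a sketch on simulated log-normal data (an assumption, not the study's data). A handy side effect: exponentiating the difference in mean logs gives the ratio of geometric means, which reads as "the typical GPT time is X times the typical no-GPT time".

```python
import numpy as np
from scipy import stats

# Simulated completion times in minutes (NOT the study's data).
rng = np.random.default_rng(0)
no_gpt = rng.lognormal(mean=np.log(15), sigma=0.6, size=250)
gpt = rng.lognormal(mean=np.log(8), sigma=0.6, size=250)

# Work on the log scale, where log-normal data becomes normal.
log_no, log_gpt = np.log(no_gpt), np.log(gpt)
res = stats.ttest_ind(log_no, log_gpt)

# exp(difference of mean logs) = ratio of geometric means.
gm_ratio = np.exp(log_gpt.mean() - log_no.mean())

print(f"log-scale t = {res.statistic:.2f}, p = {res.pvalue:.2g}")
print(f"geometric-mean ratio (GPT / no GPT): {gm_ratio:.2f}")
```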

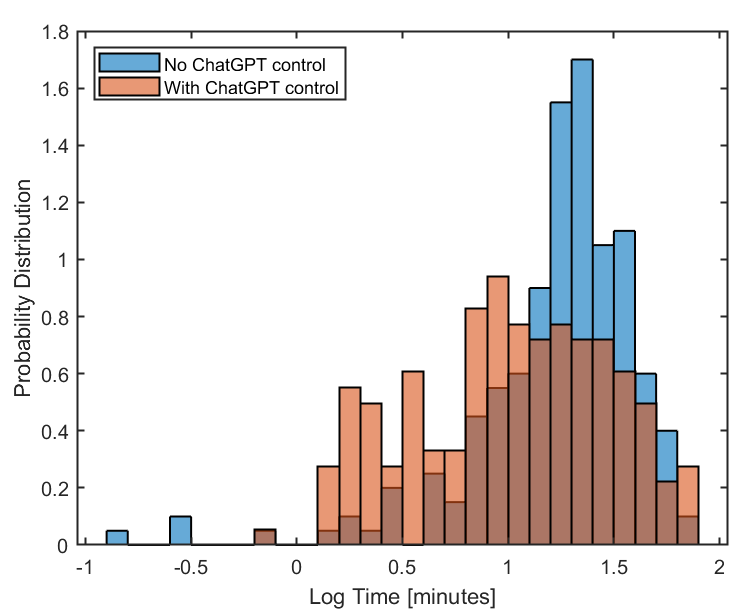

Here is the log-normal plot of the times to complete the control task, before half the respondents were asked to sign up for ChatGPT and encouraged to use it. Oops, the t-score is 4.5, which is also incredibly significant.
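Besides a t-test, a common way to quantify this kind of pre-treatment imbalance is the standardized mean difference (SMD) of the baseline measure. This is a swapped-in diagnostic, not something the thread or the paper reports. The sketch below computes it on simulated log task-1 times where the group later given ChatGPT is deliberately drawn to be faster (an assumption for illustration). `standardized_mean_difference` is a hypothetical helper name.

```python
import numpy as np

def standardized_mean_difference(a, b):
    """Mean gap divided by the square root of the average of the two group
    variances (hypothetical helper; matches Cohen's d when group sizes are equal)."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    pooled_sd = np.sqrt((a.var(ddof=1) + b.var(ddof=1)) / 2.0)
    return (a.mean() - b.mean()) / pooled_sd

# Simulated log task-1 (pre-treatment) times, NOT the study's data:
# the future ChatGPT group is drawn to be faster before any treatment.
rng = np.random.default_rng(2)
log_t1_no_gpt = rng.normal(np.log(13), 0.6, 250)
log_t1_gpt = rng.normal(np.log(10), 0.6, 250)

smd = standardized_mean_difference(log_t1_no_gpt, log_t1_gpt)
print(f"baseline SMD on log task-1 time: {smd:.2f}")
# A commonly cited rule of thumb flags |SMD| > 0.1 as meaningful imbalance.
print("imbalanced" if abs(smd) > 0.1 else "balanced")
```

Unlike a p-value, the SMD does not grow with sample size, so it speaks more directly to "how different were these groups before treatment?"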

This raises the question: what effect did ChatGPT actually have on this test? The no-ChatGPT group completed the first task more slowly than the ChatGPT group did, before anyone was asked to sign up for or use ChatGPT.

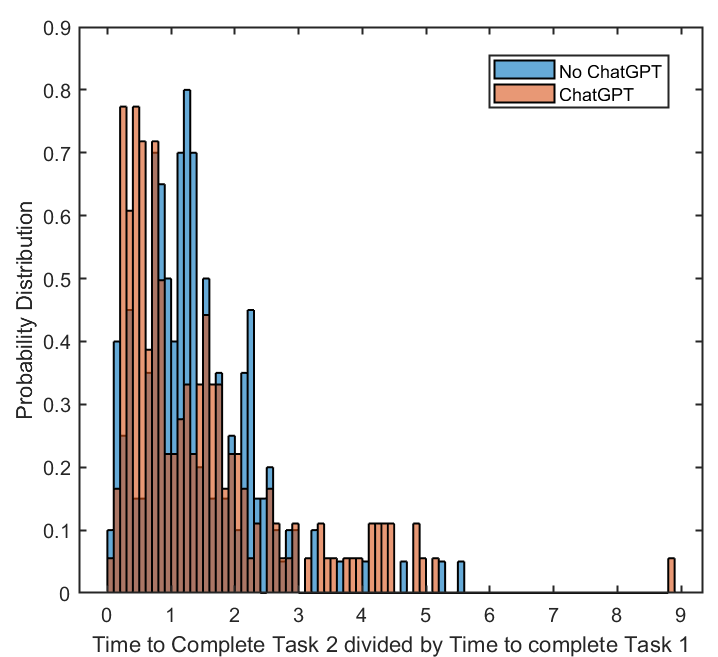

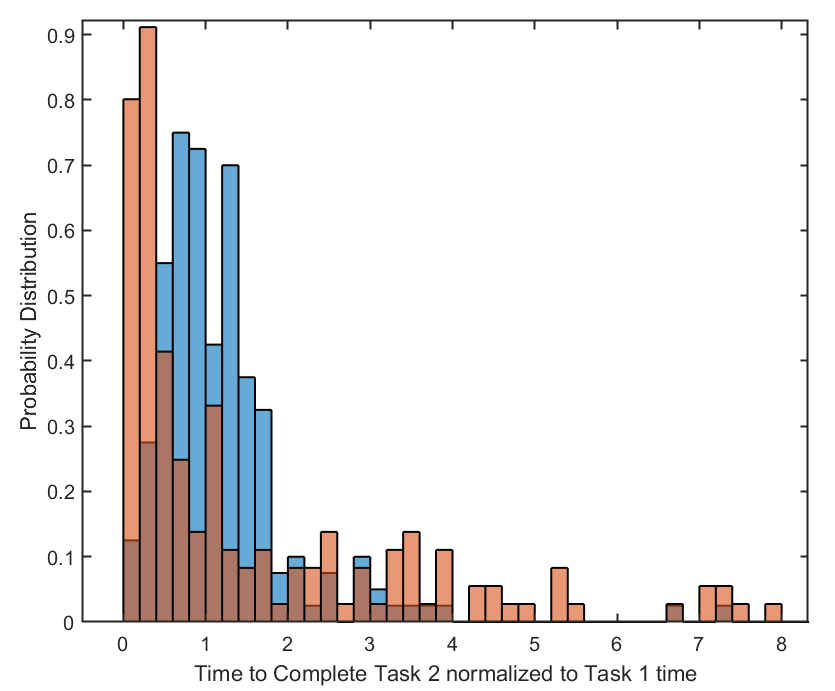

Here's each participant's time to complete task 2 divided by their own time to complete task 1, which shows how long task 2 took them relative to task 1. Super interesting.
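The per-person normalization is simple to compute, and it is worth seeing why it helps. Here is a minimal sketch with made-up paired times (not the study's data); `completion_ratio` is a hypothetical helper. A participant who is uniformly slower on both tasks gets the same ratio, so baseline speed differences between people cancel out.

```python
import numpy as np

def completion_ratio(t1, t2):
    """Per-participant task-2 time divided by task-1 time (hypothetical helper)."""
    t1 = np.asarray(t1, dtype=float)
    t2 = np.asarray(t2, dtype=float)
    if t1.shape != t2.shape:
        raise ValueError("t1 and t2 must be paired per participant")
    return t2 / t1

# Made-up paired times in minutes, for illustration only (NOT the study's data).
task1 = np.array([10.0, 20.0, 15.0, 12.0])
task2 = np.array([5.0, 30.0, 15.0, 6.0])

ratio = completion_ratio(task1, task2)
print(ratio)

# Someone twice as slow on BOTH tasks gets the same ratio: baseline speed cancels.
print(np.allclose(completion_ratio(2 * task1, 2 * task2), ratio))  # True

# On a log scale the ratio becomes a difference, log(t2) - log(t1).
log_ratio = np.log(task2) - np.log(task1)
print(np.allclose(log_ratio, np.log(ratio)))  # True
```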

The folks who did not use ChatGPT had roughly the same ratio of time from task 1 to task 2. Some were faster, some were slower, and the ratios were fairly normally distributed. However, the ChatGPT users had a Pareto-like distribution, where some performed substantially faster.

But many also performed substantially slower, much slower than those who chose not to use ChatGPT. So even though I have not rigorously tested all of the hypotheses in this paper, this analysis already casts a lot of doubt on whether ChatGPT saves anyone time.
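A simple way to put numbers on "some much faster, many much slower" is to count how many participants land in each tail of the task2/task1 ratio. The sketch below uses made-up ratios (not the study's data), and the cutoffs (at least 2x faster, at least 1.5x slower) are arbitrary illustrative choices; `tail_shares` is a hypothetical helper.

```python
import numpy as np

def tail_shares(ratios, fast=0.5, slow=1.5):
    """Return (share with ratio <= fast, share with ratio >= slow).
    ratio <= 0.5 means task 2 took at most half as long as task 1;
    ratio >= 1.5 means it took at least 1.5x as long. Cutoffs are arbitrary."""
    r = np.asarray(ratios, dtype=float)
    return float(np.mean(r <= fast)), float(np.mean(r >= slow))

# Made-up task2/task1 ratios for illustration only (NOT the study's data).
gpt_ratios = np.array([0.3, 0.4, 0.5, 0.6, 0.9, 1.1, 1.8, 2.5])
no_gpt_ratios = np.array([0.8, 0.9, 0.95, 1.0, 1.05, 1.1, 1.2, 1.3])

print(tail_shares(gpt_ratios))     # (0.375, 0.25)
print(tail_shares(no_gpt_ratios))  # (0.0, 0.0)
```

A wide, two-sided spread like the first group's can average out to a modest mean improvement even though a sizable share of people got slower.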

First, did ChatGPT save users time, or were those users simply faster than the control group at these tasks to begin with? Second, for those who used ChatGPT, why did it slow so many of them down?

This uncertainty in the data aligns with anecdotal reports from businesses using the Copilot beta at $30/seat per month, who say it's kind of neat but are unsure of the overall gains and wish it were significantly cheaper. $30/seat is roughly 0.6% of a typical worker's salary.
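A quick sanity check on the 0.6% figure, assuming the $30 is charged per seat per month (Microsoft's list price for Microsoft 365 Copilot) and an illustrative annual salary of $60,000, which is my assumption rather than a number from the thread:

$$
\frac{12 \times \$30}{\$60{,}000} = \frac{\$360}{\$60{,}000} = 0.006 = 0.6\%
$$

Equivalently, $30 is 0.6% of a $5,000 monthly salary.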

Given this, it's not surprising that the study reviewed here doesn't really seem to show that ChatGPT saves a given person all that much time.

This is something that top-tier journals like Science and Nature have fallen for in the past, chasing hype and lowering the bar for research in their journals to chase page views and citations. This article has already been cited hundreds of times, and it's garbage.

At this point, given the reality-distorting hype cloud around gen AI, only profitable AI companies will convince me that this software provides meaningful gains in productivity. Because if it does, people will pay a lot more for it than $30/pp. <\end rant>

Here are the histograms of task 2 times controlled for the time to complete task 1. On a log scale, the difference between these two populations is not significant at the 95% confidence level (t-test p-value of 0.32). No detectable effect.
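The thread doesn't spell out exactly how task 2 was "controlled for" task 1. One common approach, assumed here, is to t-test the per-person log ratios log(t2) - log(t1) instead of the raw log task-2 times. The sketch simulates a scenario (an assumption, NOT the study's data) with a baseline imbalance and no ChatGPT effect at all, to show how the naive comparison can pick up a "significant" gap that the controlled comparison removes. `simulate_tasks` is a hypothetical helper.

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(1)
n = 250

# Simulated latent speed per person (log-minutes). The "GPT" group is faster
# at baseline, and there is NO treatment effect on task 2.
speed_no_gpt = rng.normal(np.log(15), 0.5, n)
speed_gpt = rng.normal(np.log(11), 0.5, n)

def simulate_tasks(log_speed):
    """Return (task1, task2) minutes: shared speed plus independent noise per task."""
    t1 = np.exp(log_speed + rng.normal(0, 0.3, log_speed.size))
    t2 = np.exp(log_speed + rng.normal(0, 0.3, log_speed.size))
    return t1, t2

t1_no, t2_no = simulate_tasks(speed_no_gpt)
t1_gpt, t2_gpt = simulate_tasks(speed_gpt)

# Naive: compare log task-2 times directly; the baseline gap leaks in.
naive = stats.ttest_ind(np.log(t2_no), np.log(t2_gpt))

# Controlled: per-person log ratios; the shared latent speed cancels.
lr_no = np.log(t2_no) - np.log(t1_no)
lr_gpt = np.log(t2_gpt) - np.log(t1_gpt)
controlled = stats.ttest_ind(lr_no, lr_gpt)

print(f"naive task-2 test:      t = {naive.statistic:.2f}, p = {naive.pvalue:.2g}")
print(f"controlled (log ratio): t = {controlled.statistic:.2f}, p = {controlled.pvalue:.2g}")
```

Regressing log task-2 time on a treatment indicator plus log task-1 time (ANCOVA) is another standard way to make the same adjustment.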