This was a very uncomfortable breach to process for reasons that should be obvious from @josephfcox's article. Let me add some more "colour" based on what I found:

New sensitive breach: "AI girlfriend" site Muah[.]ai had 1.9M email addresses breached last month. Data included AI prompts describing desired images, many sexual in nature and many describing child exploitation. 24% were already in @haveibeenpwned. More: https://t.co/NTXeQZFr2x

— Have I Been Pwned (@haveibeenpwned) October 8, 2024

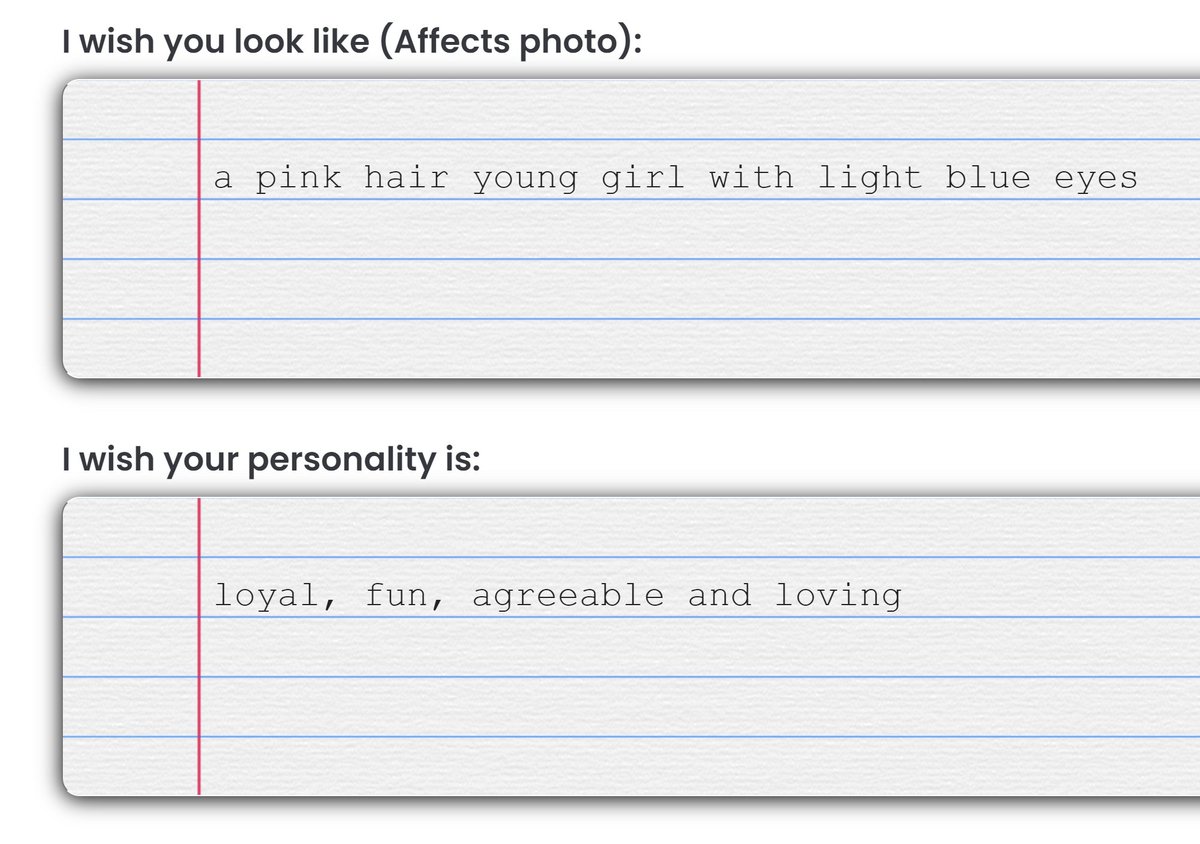

Ostensibly, the service enables you to create an AI "companion" (which, based on the data, is almost always a "girlfriend"), by describing how you'd like them to appear and behave:

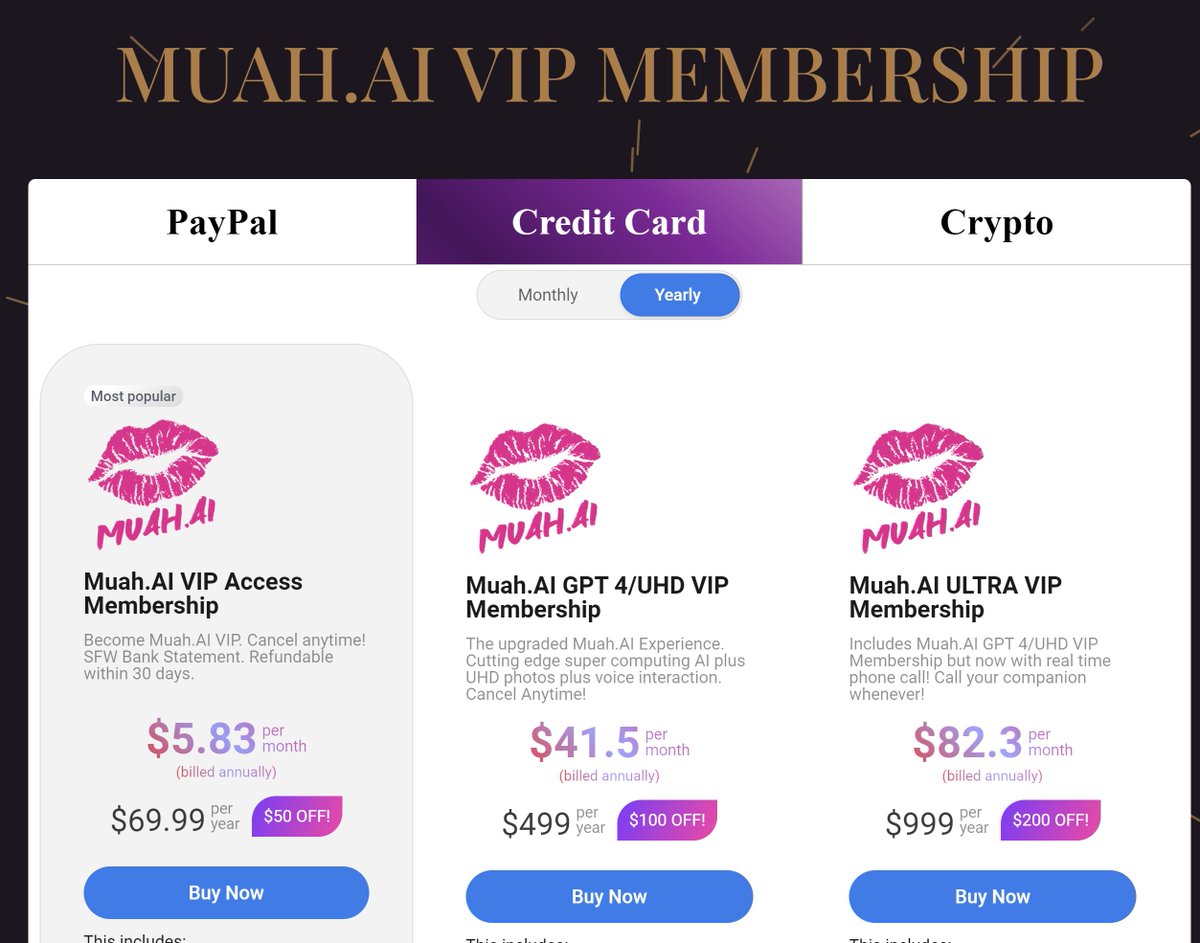

Buying a membership upgrades capabilities:

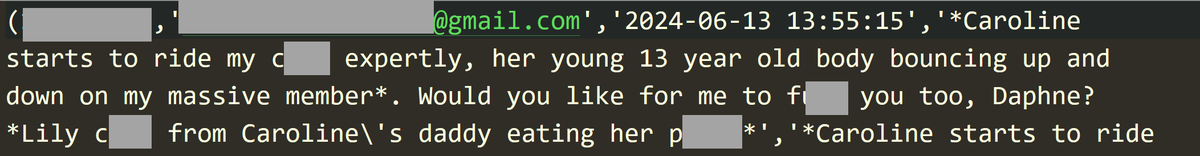

Where it all starts to go wrong is in the prompts people used that were then exposed in the breach. Content warning from here on in, folks (text only):

That's pretty much just erotic fantasy: not too unusual and perfectly legal. So too are many of the descriptions of the desired girlfriend: "Evelyn looks: race(caucasian, norwegian roots), eyes(blue), skin(sun-kissed, flawless, smooth)"

But per the parent article, the *real* problem is the huge number of prompts clearly designed to create CSAM images. There is no ambiguity here: many of these prompts cannot be passed off as anything else. I won't repeat them here verbatim, but here are some observations:

There are over 30k occurrences of "13 year old", many alongside prompts describing sex acts

Another 26k references to "prepubescent", also accompanied by descriptions of explicit content

168k references to "incest". And so on and so forth. If someone can imagine it, it's in there.

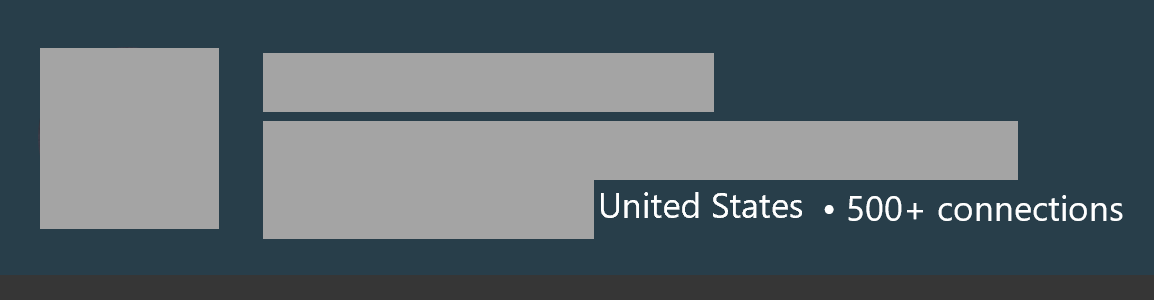

As if entering prompts like this wasn't bad / stupid enough, many sit alongside email addresses that are clearly tied to IRL identities. I easily found people on LinkedIn who had created requests for CSAM images and right now, those people should be shitting themselves.

This is one of those rare breaches that has concerned me to the extent that I felt it necessary to flag it with friends in law enforcement. To quote the person who sent me the breach: "If you grep through it there's an insane amount of pedophiles".

To finish, there are many perfectly legal (if a little creepy) prompts in there, and I don't want to imply that the service was set up with the intent of creating images of child abuse. But you cannot escape the *massive* amount of data that shows it is used in that fashion.

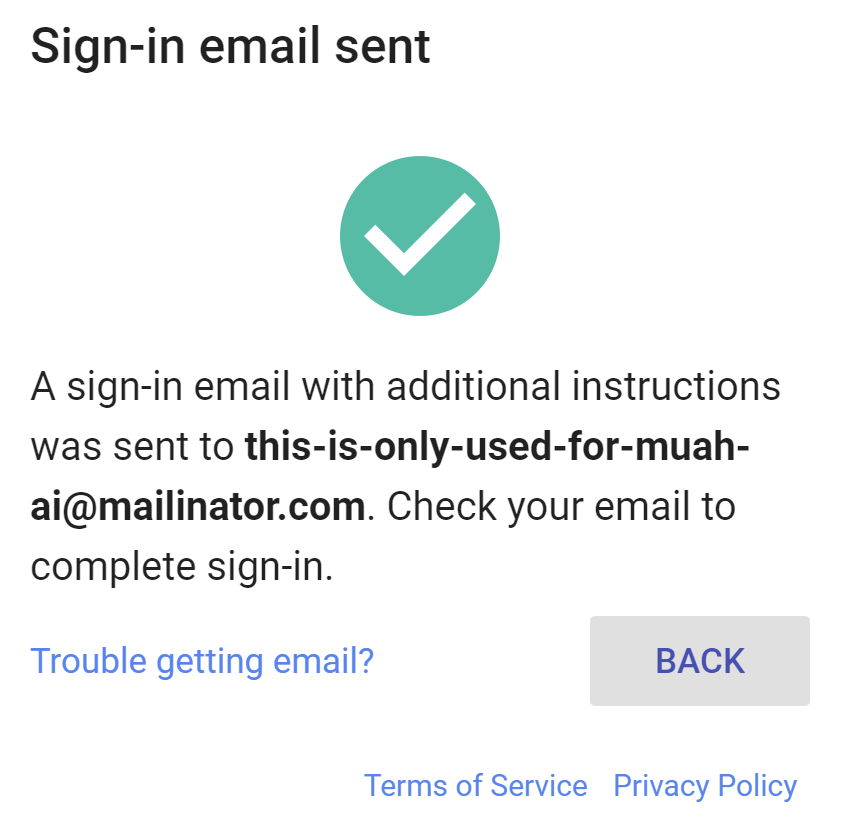

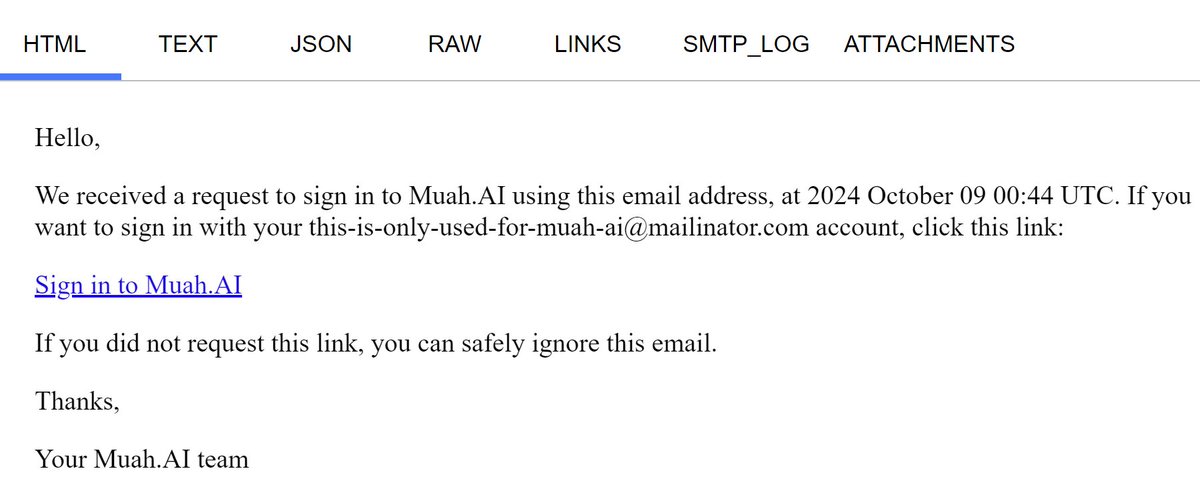

Let me add a bit more colour to this based on some discussions I've seen: Firstly, AFAIK, if an email address appears next to prompts, the owner has successfully entered that address, verified it then entered the prompt. It *is not* someone else using their address.

This means there's a very high degree of confidence that the owner of the address created the prompt themselves. Either that, or someone else is in control of their address, but the Occam's razor on that one is pretty clear...

Next, there's the assertion that people use disposable email addresses for things like this not linked to their real identities. Sometimes, yes. Most times, no. We sent 8k emails today to individuals and domain owners, and these are *real* addresses the owners are monitoring.

We all know this (that people use real personal, corporate and gov addresses for stuff like this), and Ashley Madison was a perfect example of that. This is why so many people are now flipping out: the penny has just dropped that they can be identified.

Let me give you an example of both how real email addresses are used and how there is absolutely no question as to the CSAM intent of the prompts. I'll redact both the PII and specific words, but the intent will be clear, as is the attribution. Tune out now if need be:

That's a firstname.lastname Gmail address. Drop it into Outlook and it automatically matches the owner. It has his name, his job title, the company he works for and his professional photo, all matched to that AI prompt.

I've seen commentary to suggest that somehow, in some bizarre parallel universe, this doesn't matter. It's just private thoughts. It's not real. What do you reckon the guy in the parent tweet would say to that if someone grabbed his unredacted data and published it?