1/9 🚨 New Paper Alert: Cross-Entropy Loss is NOT What You Need! 🚨 We introduce harmonic loss as an alternative to the standard CE loss for training neural networks and LLMs! Harmonic loss achieves 🛠️ significantly better interpretability, ⚡ faster convergence, and ⏳ less grokking! https://t.co/DratQQV8q0

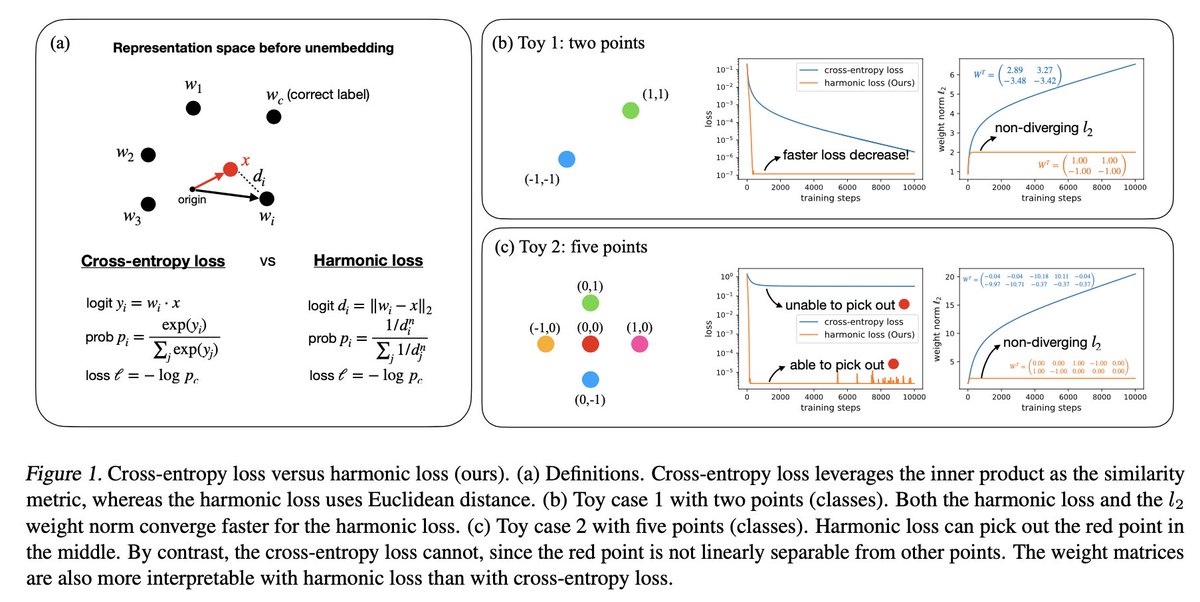

2/9 Instead of the inner product and Softmax, harmonic loss uses (a) the Euclidean distance to compute the logit, and (b) the scale-invariant HarMax function to obtain the probability. This unlocks (1) nonlinear separability, (2) fast convergence, and (3) interpretability!
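
To make the two ingredients concrete, here is a minimal sketch in plain Python. It assumes HarMax takes the form p_i ∝ 1/d_i^n, where d_i is the Euclidean distance from the input to the weight vector of class i; the exponent `n`, the `eps` guard, and the function names are illustrative choices for this sketch, not taken from the released code.

```python
import math

def harmax(distances, n=2.0, eps=1e-12):
    """Map distances to probabilities with p_i proportional to 1 / d_i**n.

    Assumption: `n` (the exponent) and `eps` (guards against d = 0)
    are illustrative defaults, not values from the paper.
    """
    # Work in log space so very small distances do not overflow.
    log_weights = [-n * math.log(d + eps) for d in distances]
    top = max(log_weights)
    weights = [math.exp(v - top) for v in log_weights]
    total = sum(weights)
    return [w / total for w in weights]

def harmonic_loss(x, class_centers, target, n=2.0):
    """Negative log-probability of `target` under HarMax.

    The "logit" for class i is the Euclidean distance between the
    input x and that class's weight vector class_centers[i].
    """
    distances = [math.dist(x, w) for w in class_centers]
    probs = harmax(distances, n)
    return -math.log(probs[target])

# Toy example: two class centers in 2D, input at the origin.
centers = [(1.0, 0.0), (0.0, 2.0)]
probs = harmax([1.0, 2.0])        # distances 1 and 2
loss = harmonic_loss((0.0, 0.0), centers, target=0)
```

Multiplying every distance by the same constant leaves the probabilities unchanged (up to the tiny `eps`), which is the sense in which this form is scale-invariant. Softmax over inner products does not have this property: scaling its logits changes the output.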

3/9 As an illustration, a standard MLP trained on modular addition (a) needs strong weight decay to generalize, (b) groks severely, and (c) forms an imperfect circle. In contrast, the harmonic model generalizes quickly without grokking and forms a perfect 2D circle.

4/9 We validate our proposal on a wide range of algorithmic datasets! Harmonic models do indeed learn PERFECT lattice, circle, tree, and other structures regardless of the random seed!

5/9 Our experiments on algorithmic datasets also verify that harmonic loss achieves better data efficiency and less grokking!

6/9 On the MNIST dataset, we find that harmonic loss makes the model weights highly interpretable: they look like images of each digit! Moreover, most peripheral pixels have weights that are almost exactly zero, in contrast to the model trained with CE loss.

7/9 When we train GPT-2 with harmonic loss, we observe that the model tends to represent semantically related word pairs (e.g. man:woman::king:queen) in a more rectangular parallelogram structure. Harmonic loss produces high-precision function vectors!
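
One simple way to put a number on "how parallelogram-like" four embeddings are is to compare the two offsets, e.g. woman − man against queen − king. The sketch below is an illustrative measure, not necessarily the metric used in the paper; `parallelogram_gap` is a hypothetical helper, and the 2D points are toy values rather than real GPT-2 embeddings.

```python
import math

def parallelogram_gap(a, b, c, d):
    """Relative mismatch between the offsets b - a and d - c.

    Returns 0.0 when a, b, c, d form a perfect parallelogram
    (the two offsets are identical) and grows as they diverge.
    """
    ab = [y - x for x, y in zip(a, b)]
    cd = [y - x for x, y in zip(c, d)]
    mismatch = math.dist(ab, cd)
    # Normalize by the mean offset length so the score is scale-free.
    scale = 0.5 * (math.hypot(*ab) + math.hypot(*cd))
    return mismatch / scale

# Toy 2D points standing in for (man, woman, king, queen).
man, woman = (0.0, 0.0), (1.0, 0.0)
king, queen = (0.0, 2.0), (1.0, 2.0)
gap = parallelogram_gap(man, woman, king, queen)  # identical offsets
```

A lower gap means the analogy offset transfers more precisely from one pair to the other.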

8/9 Looking forward, we believe harmonic loss will be an important ingredient in building AI models that are interpretable by design! We're excited to see work that applies harmonic loss to training even larger models and tests its effectiveness!

9/9 This is joint work with @ZimingLiu11, @riyatyagi86, and @tegmark! Check out the full paper and code here: paper: https://t.co/NlcM9GAbEg code: https://t.co/S1kWDZBz4k